When it comes to code, maintenance can be a troublesome creature. As projects grow in scope and size, so does the application codebase. As that growth progresses, it’s imperative to keep the codebase up to date and at a consistent level of quality. This problem is compounded when dependency (module/plugin) updates break existing code. Skipping dependency updates is, of course, not recommended, so a focus on code quality via static code analysis and continuous integration (CI) is essential.

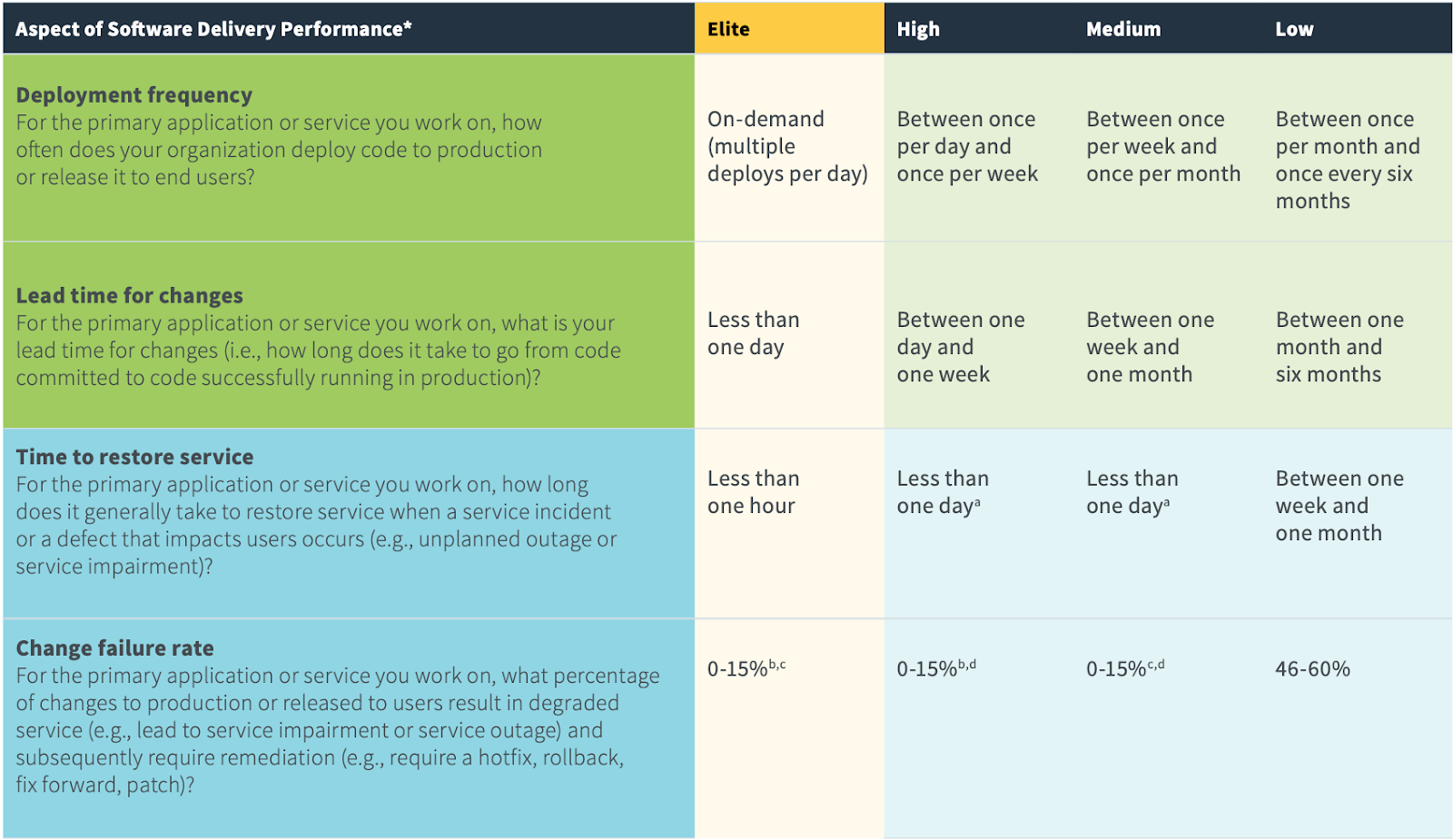

The development life cycle has shortened greatly in recent years. The Accelerate: State of DevOps 2019 report, published by DORA (DevOps Research and Assessment), concluded that there is a strong correlation between software delivery performance and DevOps. The more integrated a system is, the more effective development can be. Implementing CI and static analysis is therefore a vital stage in the maturity of any team’s software delivery performance.

At Engine Room we use Bitbucket for source control and its Pipelines offering for CI operations. We recently assessed two cloud-based products that offer development managers assurance around code quality: SonarCloud and Codacy.

SonarCloud

Developed by SonarSource, SonarCloud offers continuous code analysis through integrations with GitHub, Bitbucket, and Azure DevOps. Because it boasts of easy setup, we jumped at the opportunity to trial it against some of our projects. Unfortunately, setting it up for Bitbucket was more troublesome than expected.

Bitbucket Pipelines

For CI, Bitbucket offers Pipelines. In a nutshell, Bitbucket loads your code into a cloud container, and with Pipelines, developers can deploy integrations through a YAML file. Each integration is referred to as a “pipe,” and Bitbucket conveniently includes a pipeline validator tool in its UI. With these tools, integrations can be built, tested, and deployed swiftly, or at least one would hope. One would think that integrating SonarCloud would be simple enough, given that SonarCloud provides a YAML snippet for just about any language. This was not the case, at least for the sample Drupal project we tested SonarCloud with. We have an example YAML file available so that you can get a feel for Bitbucket Pipelines.

Example YAML File for SonarCloud:

    image: php:7.1.1

    clone:
      depth: full # SonarCloud scanner needs the full history to assign issues properly

    definitions:
      caches:
        sonar: ~/.sonar/cache # Caching SonarCloud artifacts will speed up your build
      steps:
        - step: &install-php-extensions
            name: Install PHP Extensions
            script:
              # Installing first the libraries necessary to configure and install gd
              - apt-get update && apt-get install -y libfreetype6-dev libjpeg62-turbo-dev libpng12-dev
              # Now we can configure and install the extension
              - docker-php-ext-configure gd --with-freetype-dir=/usr/include/ --with-jpeg-dir=/usr/include/
              - docker-php-ext-install -j$(nproc) gd
        - step: &install-composer
            name: Install dependencies
            caches:
              - composer # See https://confluence.atlassian.com/bitbucket/caching-dependencies-895552876.html
            script:
              - apt-get update && apt-get install -y unzip
              - curl -sS https://getcomposer.org/installer | php -- --install-dir=/usr/local/bin --filename=composer
              - composer require monolog/monolog
              - composer require phpunit/phpunit
              - composer install
              # - vendor/bin/phpunit
            services:
              - docker
        - step: &build-test-sonarcloud
            name: Build, test and analyze on SonarCloud
            caches:
              - sonar
              - composer
            size: 2x # Double Resources available for this step
            script: # Build your project and run
              - pipe: sonarsource/sonarcloud-scan:1.0.1
                variables:
                  DEBUG: "true" #Optional
            services:
              - docker
        - step: &check-quality-gate-sonarcloud
            name: Check the Quality Gate on SonarCloud
            script:
              - pipe: sonarsource/sonarcloud-quality-gate:0.1.2
      services:
        docker:
          memory: 7128

    options:
      size: 2x # Allocates twice the amount of memory globally

    pipelines: # More info here: https://confluence.atlassian.com/bitbucket/configure-bitbucket-pipelines-yml-792298910.html
      branches:
        develop:
          - step: *install-php-extensions
          - step: *install-composer
          - step: *build-test-sonarcloud
          - step: *check-quality-gate-sonarcloud
      pull-requests:
        '**':
          - step: *install-php-extensions
          - step: *install-composer
          - step: *build-test-sonarcloud
          - step: *check-quality-gate-sonarcloud

The Problem

The codebase we tested was a standard, medium-sized Drupal application written in PHP, and the Docker environment we set up reflected this. There were a number of dependencies for SonarCloud, the most important being Composer. By default, each step of a Bitbucket pipeline is allocated 4GB of memory. Our first issue was that the scan used too much memory, so we split the dependency installations into multiple steps. This did not resolve our issue.

What do you do when 4GB is not enough? Allocating more memory to the Docker environment alone did not work. Thankfully, Bitbucket allows you to increase the total memory available to 8GB by using “size: 2x.”
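As a minimal sketch of where the two memory settings live in `bitbucket-pipelines.yml`, consider the fragment below. The step name and the script command are placeholders, not part of our project. The `7128` figure mirrors the example file above; per Bitbucket's documentation, it is the ceiling for a service in a 2x step because 1024 MB of the 8192 MB total stays reserved for the build container.

```yaml
definitions:
  services:
    docker:
      memory: 7128   # MB for the Docker service; needs a 2x step to be valid

pipelines:
  default:
    - step:
        name: Memory-hungry scan        # placeholder name
        size: 2x                        # raises this step from 4GB to 8GB
        services:
          - docker
        script:
          - echo "run the scan here"    # placeholder command
```

Setting `size: 2x` under a top-level `options:` key instead applies the larger allocation to every step, which is what the example file does in addition to the per-step setting.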

It was only then that SonarCloud properly executed. The scan ran in about twelve minutes and the results it produced were relevant and useful. Unfortunately, once the branch was merged, the scan failed to execute a second time on our development branch. This time the error was not related to memory or any other resource, but to the SonarCloud pricing plan. SonarCloud’s pricing model uses lines of code (LOC) as the metric. Since the first scan had already run on a child branch, the code on the development branch was treated as distinct from the branch the YAML file was merged from. Success was only secured by upgrading to a plan that covers a million LOC at a monthly rate of 250 Euros (approx. $275).

SonarCloud is definitely a fantastic solution, and offers a free plan if your repos are public, but for our needs, it just didn’t hold up. Needing to analyze multiple private repos, we required a more cost-effective approach.

The Solution: Codacy

Codacy automates code reviews and monitors code quality on every commit and pull request. It reports the impact of every commit or pull request in terms of new issues concerning code style, best practices, and security, allowing developers to save time in code reviews and tackle technical debt efficiently. Codacy analyzes your codebase outside of the DevOps pipeline, so there is no need for YAML Pipelines, which can be reserved for continuous deployment initiatives, and we found its integration with Bitbucket to be a breeze. Codacy comes with pre-canned connectors to Bitbucket and GitHub out of the box and also integrates with Slack and Jira. Its pricing model is per user per month, and for small teams (up to four users) there is no cost, even to scan private repositories. Larger teams would need the Pro package, at $15 per user per month, which ends up being cost-effective when you consider you can add multiple repositories without worrying about the total LOC count.

The automated code review that Codacy offers has many advantages. Since the scan runs every time code is pushed, issues show up almost immediately. These range from security and code style problems to error-prone and unused code. This shortens the time it takes to get good-quality code into the branch. Additionally, the initial scan and Codacy dashboard provide great overall visibility into the level of a project’s technical debt. If you’re curious to find out more about Codacy, check out their website.
